.png)

Quick Recap: The Hallucination Challenge

Korbit’s GenAI code reviewer analyzes pull requests containing tens of thousands of lines of code, surfacing thousands of potential issues ranging from minor bugs to critical security vulnerabilities.

As we discussed in Part I and Part II, one significant obstacle in this process is hallucinations - issues flagged by LLMs that either don't actually exist or simply can't be addressed by developers. Such false positives create a lot of unnecessary noise and frustration for engineering teams.

Previously, we explored how we tackled the hallucination problem by developing a robust classification method using Chain-of-Thought (CoT) prompting. This initial solution, employing GPT-4 and successfully detected around 45% of hallucinations in our test dataset - a promising first step. But at Korbit, "good enough" isn't our standard. We suspected there was still significant room for improvement.

Leveraging Insights from Multiple Models

While analyzing misclassifications and experimenting with various prompts, we noticed something intriguing: Many hallucinations missed by GPT-4 were correctly flagged as hallucinations by another model, Claude 3.5 Sonnet - even using the exact same prompt designed for GPT-4.

This insight led us to consider whether combining GPT-4 and Claude 3.5 into a single ensemble could yield even better results.

Testing Simple Ensemble Approaches

We decided to test the simplest ensemble strategies possible, to quickly validate whether our intuition held:

- The "OR Ensemble": If either GPT-4 or Claude 3.5 flags an issue as a hallucination, we classify it as a hallucination.

- The "AND Ensemble": We classify an issue as a hallucination only if both GPT-4 and Claude 3.5 agree.

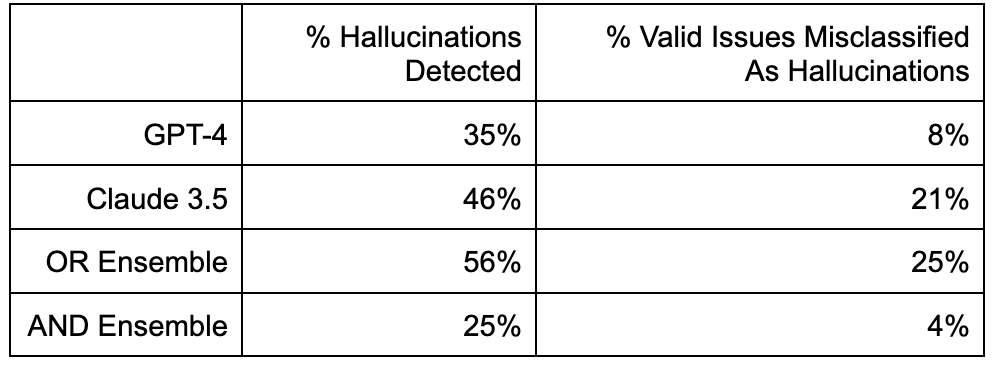

Here's how these approaches performed on our test dataset:

Table 1. Performance Evaluation

The OR Ensemble maximized recall, detecting 56% of all hallucinations—but at the cost of higher noise, mistakenly classifying 25% of valid issues as hallucinations (false positives).

Conversely, the AND Ensemble had exceptional precision, rarely misclassifying valid issues, but it was overly conservative, catching only 25% of all hallucinations.

Digging Deeper into Misclassifications

When we examined the OR Ensemble’s misclassifications closely, we identified two clear categories:

- Lack of sufficient context: The models simply didn’t have access to necessary information (e.g., a related file in the codebase), forcing it into uncertain judgments.

- Borderline or minor stylistic nitpicks: These were trivial, subjective suggestions about coding style or minor refactoring—issues that provided little real-world value and frequently annoyed developers.

Given feedback from our customers, it became clear that our primary focus should be solving for the first category - hallucinations due to missing context. Minor stylistic nitpicks could safely be deprioritized, as these often just contributed to unnecessary noise.

We thought hard about this and decided that we did not overly care about the second type of mistake related to nitpicks and borderline issues. These issues tend to add zero or little value to customers - customers, who by the way, were already telling us that our product was surfacing too many issues and wanted us to reduce the noise level.

So we decided to focus on the errors caused due to a lack of context - specifically the case where the LLM needed access to other source code files from the codebase.

We had an important signal to help us here. Recall from our Part II blog post that the LLM outputs either “Valid Issue”, “Hallucination” or “Undetermined”. The last label “Undetermined” is a very strong signal that the LLM requires access to additional context to make an accurate assessment.

So we asked ourselves: What if in the “Undetermined” cases, we asked the LLM what other file it required to evaluate the issue and then re-evaluated the issue with this context?

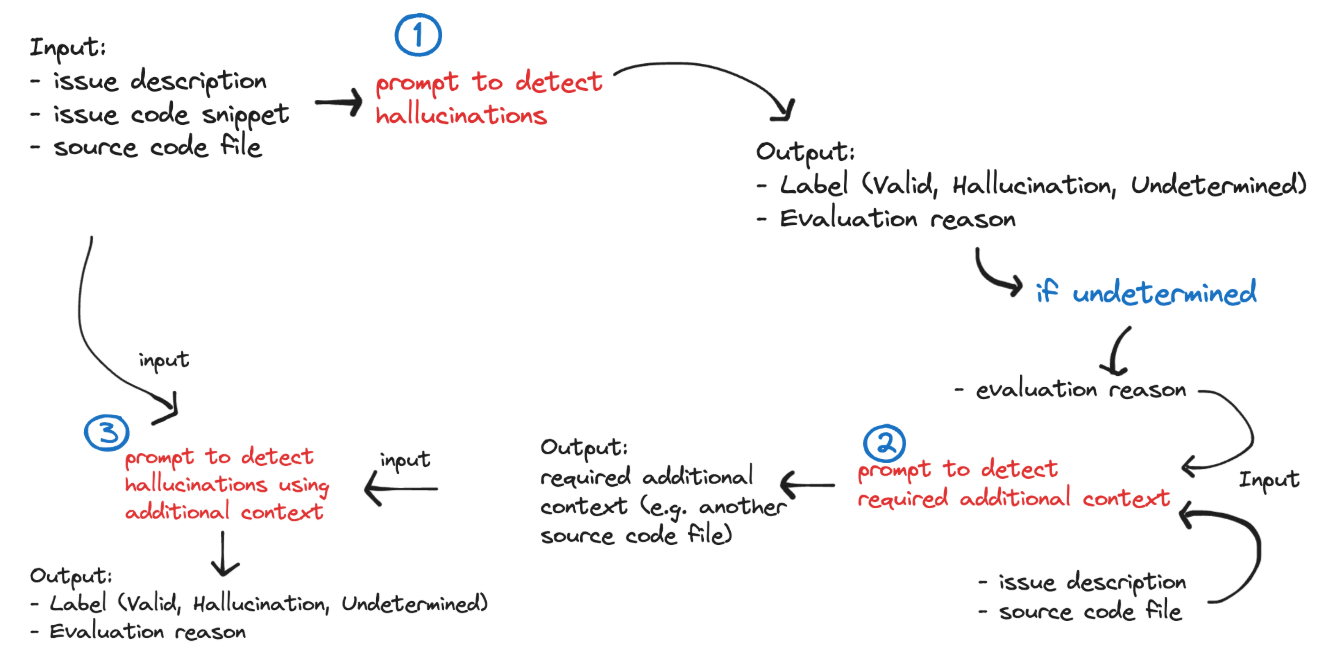

This led us to build a Multi-Hop Ensemble System:

- Step 1. Evaluate issue with GPT-4 and Claude 3.5 Sonnet:

- If any of the LLMs output “Undetermined”, then go to Step 2.

- Otherwise, if any of the LLMs output “Hallucination” then assign the label “Hallucination”,

- Otherwise, assign the label “Valid Issue”.

- Step 2. Query LLM to request a file path containing additional relevant context:

- The LLM which previously outputted “Undetermined” is queried here.

- The LLM must output a JSON object containing a file path, which is then matched using a string matching algorithm to an actual file in the repository.

- If the LLM outputs an invalid or empty path, assign the label “Valid Issue” to avoid incorrectly filtering out valid issues. Otherwise, go to Step 3.

- Step 3. Re-evaluate the issue by providing the additional file content to GPT-4 and Claude 3.5 Sonnet and asking them to re-evaluate it:

- If any of the LLMs output “Hallucination”, then assign the label “Hallucination”,

- Otherwise, assign the label “Valid Issue”.

The system is illustrated in the following flow diagram:

Figure 1. Hallucination Detection System

This system dramatically increased the performance of the system (in particular, recall of hallucinations) as we’ll show later. We experimented with further variants of this system, including increasing the number of hops to provide additional file context to the LLM, but the best performing system remained the three-hop system. As is often the case, adding too much context to an LLM can often decrease performance - especially if most of it is irrelevant!

Example: Detecting A Hallucinated Bug In LangChain

Let’s walk through a real-world example of how the system detected and removed a hallucination in a popular open-source repository.

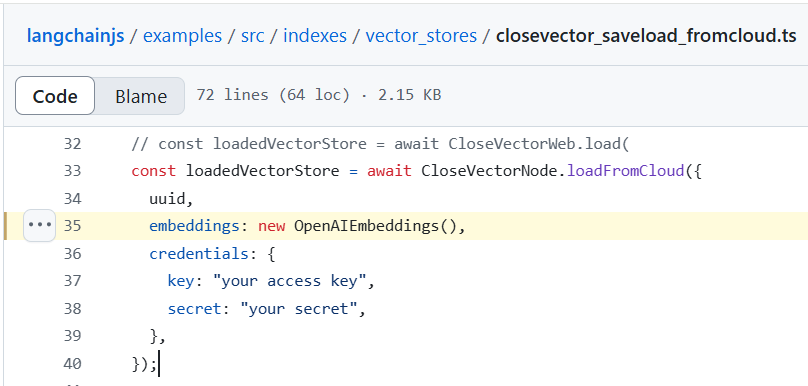

Here’s an example of a LangChain PR we scanned in our benchmark:

https://github.com/langchain-ai/langchainjs/pull/2832

In this PR, Korbit detected the following potential issue which turned out to be a hallucination:

Table 2. Issue Description

OpenAIEmbeddings is instantiated without an API key, which will cause runtime failures.

Figure 2. Screenshot of LangChain repository code

This is a hallucination because the initialization function for OpenAIEmbeddings manages the authentication key. So there is not going to be any runtime errors. We know that, but the model doesn’t know that. Showing to the developer wastes their time and creates frustration.

This issue (along with all other issues detected in the PR) was given to the Hallucination Detector. As a first step, it pulled current file content where the issue was found and then used Chain of Thought to query both GPT-4o and Claude 3.5 to evaluate the issue.

Let’s take a look at the outputs of GPT-4o for the first step of the algorithm:

Table 3. GPT-4o Output: 1st Step

{

"question_1": "Is the instantiation of OpenAIEmbeddings done without providing an API key in any of the code instances?",

"answer_1": "Yes, the instantiation of OpenAIEmbeddings in multiple places like 'new OpenAIEmbeddings()' does not show any API key being provided.",

"question_2": "Does OpenAIEmbeddings require an API key to function properly at runtime?",

"answer_2": "Typically, services like OpenAI require API keys for authentication to access their services.",

"question_3": "Is there any indication in the documentation or comments that providing an API key is necessary for OpenAIEmbeddings?",

"answer_3": "The provided snippets do not explicitly mention the need to include an API key for OpenAIEmbeddings.",

"evaluation": "Valid Issue",

"evaluation_reason": "The instantiation of OpenAIEmbeddings without an API key is likely to cause runtime issues as these services generally require authentication via API keys."

}

Notice how the model first correctly asks about the API and concludes that it’s not given anywhere. If you read the source code you’ll see that it’s indeed not given anywhere in that file. It then reasons around whether API keys are necessary typically, and again that they are typically needed.

Finally, it concludes “The instantiation of OpenAIEmbeddings without an API key is likely to cause runtime issues as these services generally require authentication via API keys.”. If all the model could see is the current file content, then that conclusion seems reasonable enough although ultimately it will prove to be incorrect since the API key is set elsewhere. So if we had only queried GPT-4o, the evaluation would have been that this issue is valid and it would have been shown to the developer - causing frustration and pain. Fortunately, the system is an ensemble system with multiple checks and balances to handle exactly this type of situation.

Next let’s take a look at the output from Claude 3.5:

Table 4. Claude 3.5 Output: 1st Step

{

"question_1": "Is OpenAIEmbeddings instantiated without an API key in the code?",

"answer_1": "Yes, OpenAIEmbeddings is instantiated without an explicit API key in the code snippet provided.",

"question_2": "Are there any other configurations or environment variables that might provide the API key?",

"answer_2": "The code doesn't show any environment variable setup or other configurations for the API key. However, it's possible that the API key is set up elsewhere in the project or environment, which we can't determine from this snippet alone.",

"question_3": "Does the code show any signs of runtime failures due to missing API key?",

"answer_3": "The code doesn't show any error handling or signs of runtime failures. It appears to use the OpenAIEmbeddings instance without any issues in multiple places.",

"evaluation": "Undetermined",

"evaluation_reason": "While it's true that the OpenAIEmbeddings is instantiated without an explicit API key in the visible code, we can't definitively say this will cause runtime failures. The API key might be set up in the environment or elsewhere in the project. Additionally, the code doesn't show any signs of failing due to a missing API key. Without more context about the project setup and runtime behavior, we can't conclusively determine if this is a valid issue or a hallucination."

}

The Chain of Thought reasoning given by Claude 3.5 is similar to GPT-4o for the first part, but then diverges at the end where it asks "Does the code show any signs of runtime failures due to missing API key?". It reasons and answers its own question by saying “It appears to use the OpenAIEmbeddings instance without any issues in multiple places.". So the fact that it observes the same pattern multiple times in the codebase leads it to think that this is likely not a runtime issue - although it cannot know this for certain. Finally, it concludes that the issue is “Undetermined”, which is really the best the system could have done given its limited context.

This in turn triggers the next step of the algorithm to go find additional context in the rest of the codebase. At any time, if either model classifies an issue as “Undetermined”, the Hallucination Detector will move on to the 2nd phase of grabbing additional context from other files and re-evaluating the given issue. Better safe than sorry!

The Hallucination Detector queries Claude 3.5 to identify additional context outputting:

Table 5. Claude 3.5 Output: 2nd Step

{

"class_name": "OpenAIEmbeddings",

"file_name": "openai.ts",

"file_path": "langchain/src/embeddings/openai.ts"

}

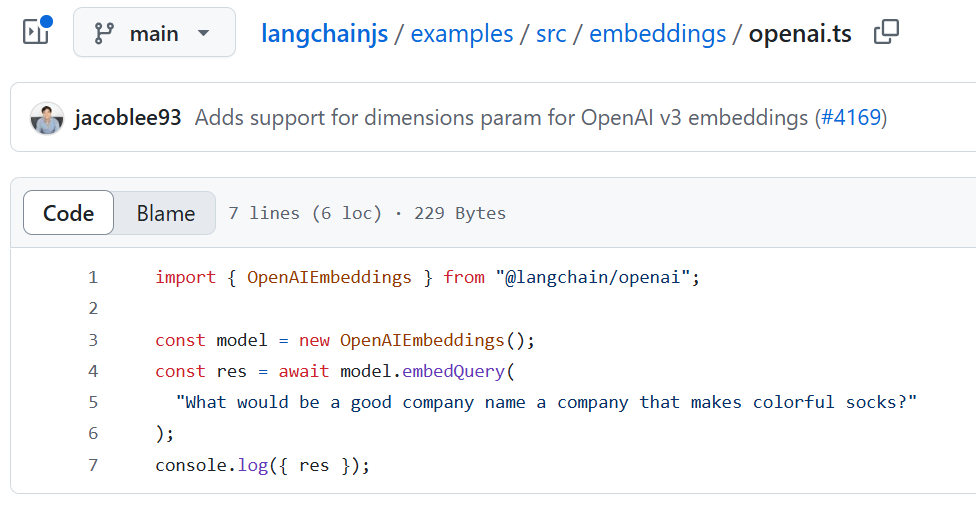

This gets matched to the file examples/src/embeddings/openai.ts, which shows another example of how to initialize OpenAIEmbeddings in the codebase:

Figure 3. File src/embeddings/openai.ts

The file doesn’t show where the API gets defined, but it provides further evidence to the LLMs showing that the typical approach is to do an initialization with no API keys provided.

Finally, this new file’s content is given as an input to both GPT-4o and Claude 3.5 to re-evaluate the issue. Here’s what GPT-4o responded with:

Table 6. GPT-4o Output: 3rd Step

{

"question_1": "Is there any evidence in the code that the OpenAIEmbeddings class requires an API key during instantiation?",

"answer_1": "No, the provided code does not show any requirement or parameter for an API key when instantiating OpenAIEmbeddings.",

"question_2": "Is the OpenAIEmbeddings instance used in a way that requires an API key later in the code?",

"answer_2": "No, the usage of OpenAIEmbeddings in the code does not indicate any requirement for an API key. The credentials are used while saving or loading the vector store to or from the cloud, not while creating the instance of OpenAIEmbeddings.",

"question_3": "Does the OpenAIEmbeddings class initialization parameter match any other code context indicating an issue for not providing an API key?",

"answer_3": "No, there are no indications in the provided code context that initializing OpenAIEmbeddings without an API key is problematic or will cause runtime failures.",

"evaluation": "Hallucination"

}

The model correctly concluded that “... there are no indications in the provided code context that initializing OpenAIEmbeddings without an API key is problematic or will cause runtime failures”. Therefore, it then moved on to classify the original issue as a “Hallucination”.

This output is already sufficient to conclude that the issue is a hallucination and to remove it. However, let’s also take a look at the output from Claude 3.5 here:

Table 7. Claude 3.5 Output: 3rd Step

{

"question_1": "Is OpenAIEmbeddings instantiated in the code without providing an API key?",

"answer_1": "Yes, OpenAIEmbeddings is instantiated without an explicit API key in the code snippets shown.",

"question_2": "Are there any other ways to provide the API key that might not be visible in this code snippet?",

"answer_2": "Yes, there could be other ways to provide the API key, such as environment variables or configuration files, which are not visible in this code snippet.",

"question_3": "Does the code show any signs of error handling or configuration that might suggest the API key is being managed elsewhere?",

"answer_3": "No, the code doesn't show explicit error handling or configuration related to the OpenAI API key. However, the code does include credential management for CloseVector, which suggests that API keys might be managed in a similar way for OpenAI.",

"evaluation": "Undetermined"

}

Claude 3.5 reviewed the additional code provided and concluded again that the issue is “Undetermined”. That would have also been sufficient to either remove or at least suppress the issue from being shown to the developer.

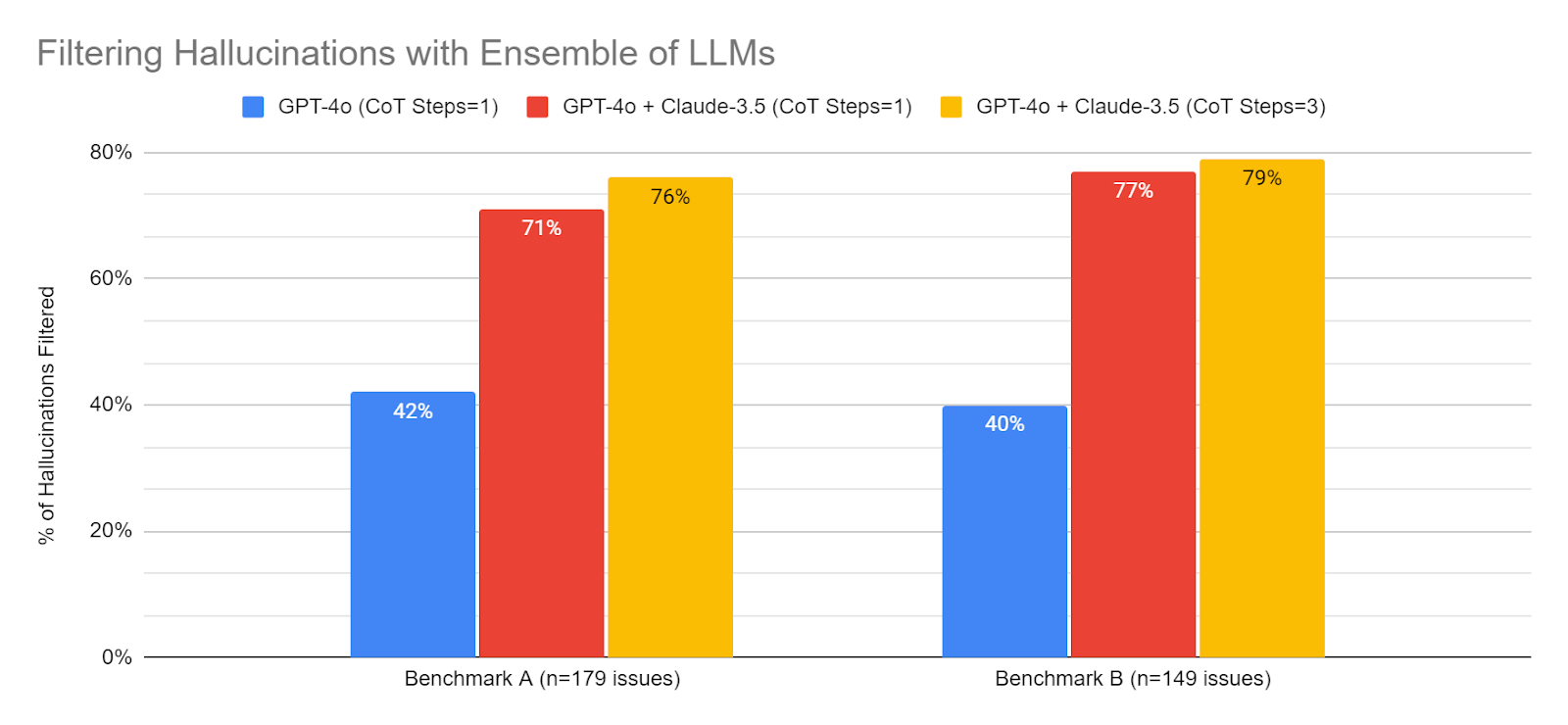

Ensemble System Detects Almost 80% of Hallucinations

The following shows results for two benchmark test datasets (n=328 issues in total)

Table 8. Performance on Two Benchmark Test Datasets (n=328 issues in total)

As can be seen from the results, by combining the CoT technique with an Ensemble and Multi-Hop System, we are now able to detect almost 80% of all hallucinations. This was a huge win for us, because it dramatically reduced the problem of hallucinations in the system - thereby reducing frustration and pain caused to our many customers and developers using Korbit.

Furthermore, we manually reviewed the few valid issues that were incorrectly labelled as “hallucination” (i.e. false positives) by the system. The majority of these issues were minor nitpicks and borderline issues, which we believed would not have a significant negative impact on value to customers - indeed many customers would probably prefer to mute such issues.

Building this system required weeks of work involving reviewing and annotating issues, collecting datasets, and system and prompt engineering followed by the final implementation into our production system - but it resulted in a big win for us and our customers!